Sonatype Nexus Repository

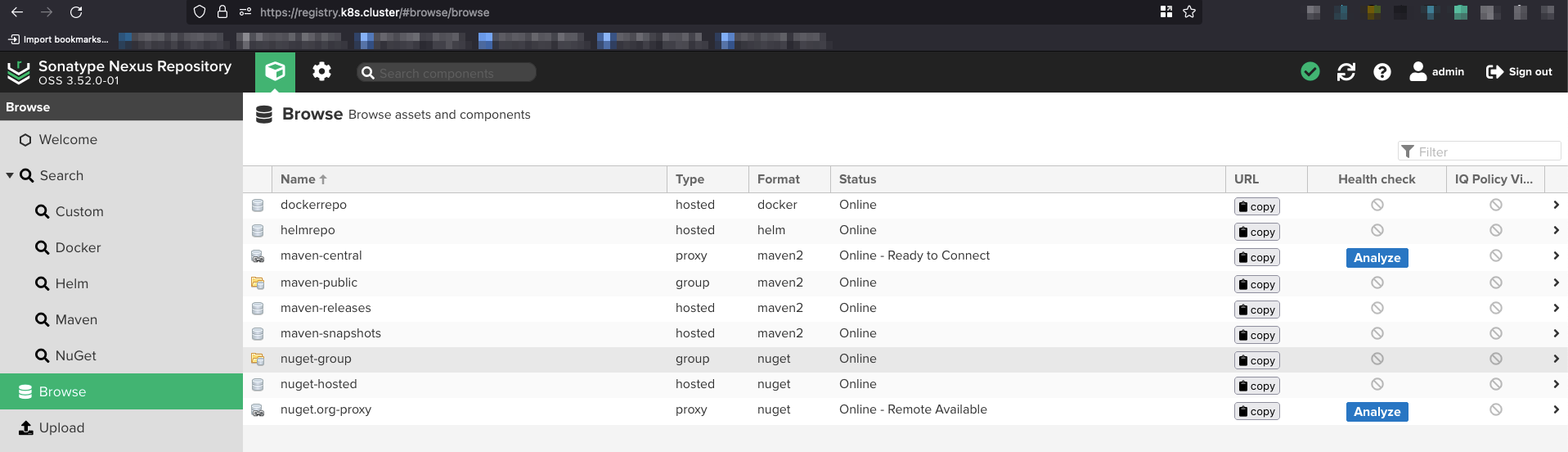

A repository is a server that stores artifacts, both those used by the build process and those it produces as output. There are various paid and open-source repository solutions, and GitHub and GitLab also offer built-in package registries. JFrog Artifactory is a great artifact repository with a decent OSS edition. Sonatype Nexus Repository supports many formats, including Maven, npm, APT, Docker and Helm, several of which JFrog does not generally offer in its OSS edition. Sonatype also publishes a Docker image of Nexus Repository, which makes it a perfect candidate to run on Kubernetes. In this article we run Sonatype Nexus on a Kubernetes cluster.

Prepare persistent storage

Longhorn is the persistent storage provider for this setup. Create a PersistentVolumeClaim (PVC) that requests space for Sonatype Nexus Repository to store its artifacts. We have allocated 10 GiB of persistent storage for the registry.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: registry-pvc
  namespace: registry   # a pod can only mount a PVC from its own namespace
spec:
  storageClassName: longhorn
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```
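The Deployment and Ingress in this article are placed in the `registry` namespace, and a pod can only mount a PVC that lives in its own namespace, so the claim has to be created there as well. If that namespace does not exist yet, a minimal manifest could look like the sketch below. It assumes nothing else in the cluster already creates or manages this namespace.

```yaml
# Namespace shared by the PVC, Deployment, Service and Ingress
apiVersion: v1
kind: Namespace
metadata:
  name: registry
```

Apply it before the other manifests, for example by placing it first in the same file.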

Prepare the Nexus deployment with an init container

The init container has one specific purpose: to set the owner and group of the data volume used by Sonatype Nexus Repository.

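```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nexus
  namespace: registry
spec:
  replicas: 1
  # Stop the old pod before starting a new one: a ReadWriteOnce volume attaches
  # to a single node, and a second Nexus should not open the same data directory.
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nexus-server
  template:
    metadata:
      labels:
        app: nexus-server
    spec:
      initContainers:
        # Runs to completion before Nexus starts and hands /nexus-data
        # to the nexus user (UID/GID 200) that the sonatype/nexus3 image runs as.
        - name: chown-nexusdata-owner-to-nexus
          image: busybox
          command: ['chown', '-R', '200:200', '/nexus-data']
          volumeMounts:
            - name: nexus-data
              mountPath: /nexus-data
      containers:
        - name: nexus
          image: sonatype/nexus3:latest   # consider pinning a specific version tag
          resources:
            limits:
              memory: "4Gi"
              cpu: "1000m"
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8081   # web UI and HTTP repository endpoints
              name: admin-port
            - containerPort: 8082   # Docker registry connector, configured inside Nexus
              name: docker-port
          volumeMounts:
            - name: nexus-data
              mountPath: /nexus-data
      volumes:
        - name: nexus-data
          persistentVolumeClaim:
            claimName: registry-pvc
```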

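The busybox init container changes the ownership of the data volume mounted at /nexus-data to the nexus user (UID and GID 200), which the sonatype/nexus3 image runs as. It runs to completion before the Nexus container starts, so it only sets the stage for the rest of the deployment. We use separate ports for the admin interface (8081) and the Docker registry (8082). The registry port only answers after a Docker repository with an HTTP connector on port 8082 has been created in Nexus.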
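A commonly used alternative to a chown init container is a pod-level `securityContext`. When `fsGroup` is set, the kubelet makes supported volumes group-owned by that GID when it mounts them. Block-backed ReadWriteOnce volumes such as Longhorn's typically support this. The fragment below sketches that approach and is not part of the original setup. It would replace the `initContainers` section of the pod template and assumes the same UID/GID 200.

```yaml
# Pod template spec fragment (sketch): replaces the chown initContainer
spec:
  securityContext:
    runAsUser: 200                       # nexus user in the sonatype/nexus3 image
    runAsGroup: 200
    fsGroup: 200                         # kubelet makes the volume group-owned by GID 200
    fsGroupChangePolicy: OnRootMismatch  # skip the recursive change if the root already matches
```

This avoids pulling an extra image. However, it only adjusts group ownership and depends on volume support, whereas the init container works regardless of the volume type.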

Prepare the service and ingress definitions

The Service exposes port 8081 as the administrative port (the Nexus web UI) and port 8082 as the Docker registry port of the repository.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nexus
  namespace: registry   # same namespace as the Deployment and the Ingress
spec:
  type: ClusterIP
  selector:
    app: nexus-server   # matches the pod template labels of the Deployment
  ports:
    - name: admin-port
      port: 8081
      protocol: TCP
      targetPort: 8081
    - name: docker-port
      port: 8082
      protocol: TCP
      targetPort: 8082
```
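The Service only sends traffic to pods that report Ready. Without a readiness probe, a pod counts as Ready as soon as its containers start, even though Nexus usually needs a minute or more to boot. The sketch below adds probes to the nexus container in the Deployment. It assumes the Nexus status endpoint `/service/rest/v1/status` on port 8081, and the timing values are illustrative guesses that you should tune for your cluster.

```yaml
# Fragment for the nexus container in the Deployment (sketch)
readinessProbe:
  httpGet:
    path: /service/rest/v1/status   # returns 200 once Nexus can serve read requests
    port: 8081
  initialDelaySeconds: 60
  periodSeconds: 10
  failureThreshold: 6
livenessProbe:
  httpGet:
    path: /service/rest/v1/status
    port: 8081
  initialDelaySeconds: 240          # generous, so a slow first boot is not restarted
  periodSeconds: 30
  failureThreshold: 6
```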

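The Ingress definition lets clients outside the cluster reach each service port through its own hostname instead of a port number: registry.k8s.cluster for the admin interface and hub.k8s.cluster for the Docker registry.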

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sonatype-ingress
  namespace: registry
  annotations:
    # cert-manager issues certificates for the hosts listed under spec.tls
    cert-manager.io/cluster-issuer: ca-issuer
    # "0" disables the request body size limit, needed for large image layers
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    # type of authentication
    # nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    # nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    # nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required'
spec:
  ingressClassName: nginx
  # tls belongs to spec (a sibling of rules), not to an individual rule
  tls:
    - hosts:
        - registry.k8s.cluster
      secretName: registry-tls
    - hosts:
        - hub.k8s.cluster
      secretName: hub-tls
  rules:
    - host: registry.k8s.cluster   # Nexus web UI
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nexus
                port:
                  number: 8081
    - host: hub.k8s.cluster        # Docker registry connector
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nexus
                port:
                  number: 8082
```

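The cert-manager.io/cluster-issuer annotation relies on a cert-manager setup. cert-manager generates TLS certificates on the fly from the ca-issuer ClusterIssuer and stores them in the registry-tls and hub-tls secrets. More on cert-manager in the next blog article.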
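In the meantime, here is a minimal sketch of a CA-backed ClusterIssuer that matches the ca-issuer name used in the annotation. The secret name `ca-key-pair` is a hypothetical placeholder. That secret must hold your CA certificate and private key (`tls.crt` and `tls.key`). For a ClusterIssuer, it must also live in cert-manager's cluster resource namespace, which is `cert-manager` by default.

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: ca-issuer             # referenced by cert-manager.io/cluster-issuer on the Ingress
spec:
  ca:
    secretName: ca-key-pair   # hypothetical Secret holding the CA's tls.crt and tls.key
```

Clients such as the Docker daemon must trust this CA before they can push to or pull from hub.k8s.cluster.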